How trained teams, verified workflows, and multi-layer review systems deliver reliable, high-quality data— from our CEO’s desk.

High-quality labeled data is the bedrock of any production-ready AI system. Yet one of the most common places projects fail is not in model architecture or compute — it’s in annotation. I say this from experience: at FYT Localization we’ve worked with diverse teams across languages, modalities, and industries, and we keep returning to the same truth — quality at scale requires structure.

This article explains the practical framework we use to deliver dependable annotation for large projects: clear guidelines, trained role-based teams, verified workflows, multi-layer review, and continuous feedback. I’ll share examples from voice, medical, fintech and humanitarian datasets so you can see how this plays out in the field.

Why annotation without structure breaks things

When annotation is treated like a one-off task, three problems quickly appear:

- Inconsistency. Two annotators interpret the same instruction differently.

- Drift. Over time, annotators deviate from the original standard.

- Opacity. You can’t explain why a label was applied — you can only guess.

Unstructured work means models learn noise. Structured coordination turns a group of contributors into a reliable, repeatable team. That’s what clients expect and what we deliver.

The five pillars of production-grade annotation

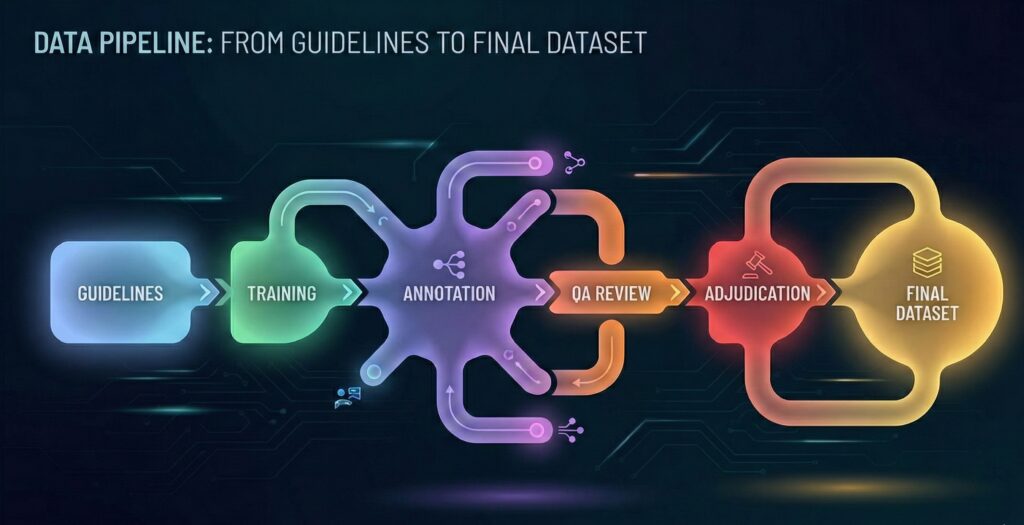

From our projects, five elements consistently predict success. We apply them to every large program.

1. Plain-spoken, versioned guidelines

Guidelines are the single most important artifact. They must be:

- Short, clear, and example-rich (include ambiguous edge cases).

- Versioned with change logs so you can track why decisions were made.

- Paired with quick decision trees and “do / don’t” examples.

How we use them: for every new task we publish a one-page quick guide and a longer reference. Annotators start with the one-pager; the reference is used when they need depth.

2. Trained, role-based teams — not one-person armies

Role clarity matters more than headcount. Our core roles are:

- Project manager: client liaison and timeline owner.

- Annotation lead / trainer: runs calibration and handles edge cases.

- Annotators: the workforce doing labels. We prefer long-term contractors or full-time annotators for continuity.

- Quality reviewers / adjudicators: resolve disputes and enforce standards.

- Subject-matter experts (SMEs): involved where precision is critical (medicine, law, finance).

Practical note: during onboarding each annotator completes a qualification test based on pilot data. If they don’t pass, we coach and retest.

3. Pilot runs and measured ramp-up

Never jump straight to production. Always pilot.

Our pilot approach:

- Annotate a representative sample (500–2,000 items depending on modality).

- Measure inter-annotator agreement and identify guideline gaps.

- Update the guideline and re-calibrate the team.

- Only then scale.

Pilots expose the real challenges — unusual phrasing, dialectal variance, or tool limitations — so you don’t pay to fix avoidable problems later.

4. Multi-layer QA and adjudication

A single pass is rarely enough. Our multi-layer QA typically includes:

- Automated checks for format, missing fields, or impossible values.

- Random spot checks by reviewers to catch systemic issues.

- Duplicate labeling on a percentage of items to measure agreement.

- Adjudication by a senior reviewer or SME for disagreements.

We set the dual-annotation rate by project risk: 5–10% for general tasks and higher for safety-critical ones.

5. Continuous feedback and meaningful KPIs

We track metrics that matter: inter-annotator agreement (IAA), reviewer rejection rates, throughput per annotator, and drift over time. Those numbers are fed back into short quality standups and retraining sessions.

Why it’s important: data quality is not static. Teams improve rapidly with timely, specific feedback.

Tools and infrastructure that make life easier

Good tooling reduces human error and preserves provenance:

- Choose annotation platforms that support role management, versioned guidelines, audit logs and review workflows.

- Export metadata (annotator ID, timestamps, guideline version) so every label is traceable.

- Integrate corrected labels back into model training pipelines (active learning loops) to improve future annotation efficiency.

Security and privacy matter too: apply encryption, access controls, and retention policies when projects touch sensitive data.

Data governance and ethics — non-negotiable

Large projects often involve personal or sensitive content. Our commitments:

- Minimize data retention; anonymize where possible.

- Apply strict access controls and logging.

- Use consented or synthetic datasets when real data is too risky.

- Involve compliance and SMEs early for health, legal or regulated domains.

Treat governance as part of the workflow — not an afterthought.

Sector examples — how structured coordination looks in practice

Below are practical examples we’ve used to get clean, production-ready datasets.

Voice datasets for African languages

Challenge: many dialects, code-switching, unclear punctuation.

Approach: pilot with 1,000 utterances per dialect, use simple illustrated guidelines for hesitations and code-switching, run local annotator training, and dual-annotate 10% for IAA. Linguists adjudicate disputes.

Outcome: a clean ASR training set suitable for robust speech recognition in low-resource languages.

Medical image labeling for triage systems

Challenge: labels can affect clinical decisions.

Approach: two-stage workflow — annotators mark regions and severity; SMEs verify and grade. Maintain a verified gold set for drift checks.

Outcome: dataset defensible for clinical trials and model validation.

Fintech transaction semantics

Challenge: local terms and regulatory nuance vary by country.

Approach: build a glossary of approved financial terms per market, require Full SME review for any legal or compliance copy, and test critical flows with pilot users.

Outcome: onboarding language that reduces support tickets and increases successful conversions.

Cost vs. value — plan realistically

High-quality annotation requires investment, but costs scale predictably with process:

- Budget onboarding as non-billable time early on.

- Expect higher QA and SME hours for high-risk projects.

- Include tooling and secure storage in forecasts.

The return on investment is real: cleaner labels reduce model iterations, lower production errors, and avoid costly rollbacks. Often a small quality increase yields outsized model gains.

A kickoff checklist you can use today

Copy this into your next project brief:

- Publish a concise, example-rich guideline and version it.

- Run a pilot (500–2,000 items) and measure IAA.

- Recruit and train annotators; use qualification tests.

- Implement multi-layer QA (automated checks, spot checks, dual annotation).

- Track KPIs (IAA, rejection rate, throughput) and hold weekly quality standups.

- Keep a gold/reference set for continuous validation.

- Ensure privacy and governance controls are in place.

- Budget SMEs and longer review cycles for high-risk data.

A personal note from our desk

When we first scaled a voice dataset across four languages, the data looked promising but our early models failed in production. That failure taught us something invaluable: no amount of model sophistication substitutes for consistent, human-driven labeling.

Since then, FYT Localization has refined its approach around the five pillars above. We don’t treat annotation as a checkbox — we treat it like building a product. We design, pilot, test, measure, and iterate. The result is data our clients can trust in production.

If your team is planning a large annotation project, start with structure. If you already have data, test it against a small gold set and measure agreement. The results will tell you what to fix first.

Ready to get started?

Large-scale annotation can feel like a mountain. With the right team and the right process, it becomes repeatable — and predictable. If you’d like, FYT Localization can:

- run a pilot and produce a clean gold set;

- design customized guidelines and glossaries;

- build and manage annotation teams;

- implement multi-layer QA and regular reporting.

Contact us today at FYTLOCALIZATION and we’ll walk through a tailored plan for your data, timeline, and risk profile. Let’s make your labels a competitive advantage, not a production risk.